Artificial Intelligence (AI) is no longer a mere experiment; it is a revolution. It has established itself as a strategic lever for any company wishing to innovate and remain competitive. However, behind the promises of performance lie very real risks: algorithmic bias, privacy breaches, and regulatory non-compliance.

To fully benefit from AI, deploying models is not enough: you must structure effective AI governance. Before even considering operational steps, certain foundations must be laid to ensure security, compliance, and added value.

In this article, we present three concrete prerequisites that will allow your organisation to build solid AI governance that is ready to support innovation.

Defining a Dedicated AI Organisational Structure

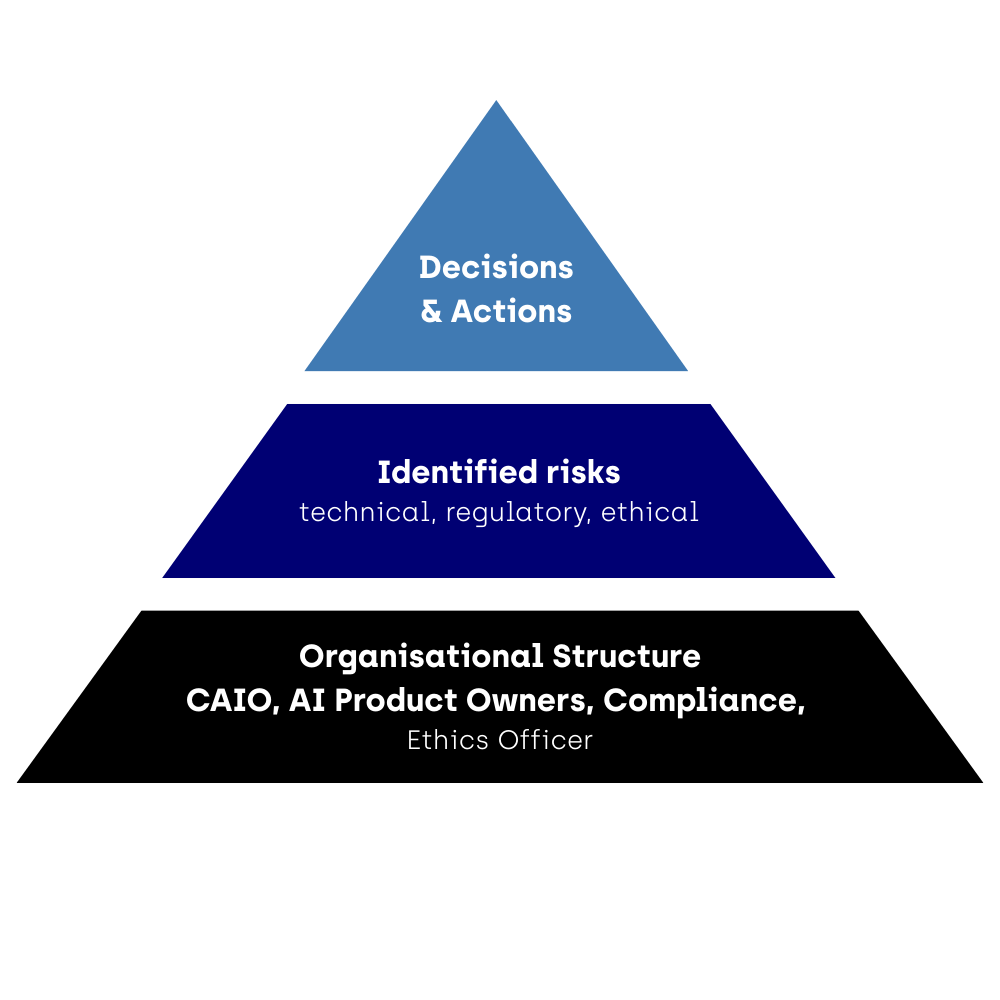

The first cornerstone of any successful AI governance is a clear and accountable organisation. Without well-defined roles, AI initiatives risk becoming fragmented, and associated risks may go unnoticed. This is not just a matter of an org chart; it is a true architecture of responsibilities.

Roles and Responsibilities

- Chief AI Officer (CAIO): Oversees the company’s AI strategy, prioritises projects based on risks and opportunities, and acts as the bridge to the executive board.

- Data & AI Product Owners: Responsible for AI projects within each business unit, ensuring operational follow-up and model compliance.

- Compliance Officer / Risk Manager: Audits AI initiatives to ensure they comply with local and international regulations (the EU AI Act, UK and US rules, etc.) as well as internal security and ethics standards.

- Ethics Officer / AI Ethics Committee: Validates sensitive use cases, identifies potential biases, and proposes corrective actions.

Admittedly, this organisational chart may seem ambitious, especially for smaller organisations. Not every company needs to recruit a full-time Chief AI Officer or Ethics Officer. The key is to define responsibilities clearly and ensure each one is covered, whether by an individual, a cross-functional committee, or shared roles.

Committees and Working Groups

- AI Governance Committee: Conducts regular reviews of all AI projects, evaluating their risks and performance metrics.

- Cross-Departmental Working Groups: Facilitate communication between IT, Data, Business units, and Legal departments.

Concrete Example

Several companies have established monthly AI Governance Committees where each department presents its project status, identified risks, and decision logs. This centralised approach prevents “shadow AI” (isolated initiatives) and ensures that all practices remain aligned with corporate standards.

Mastering Data and Reference Frameworks: The Indispensable Foundation

Effective AI governance relies, first and foremost, on data quality and reliability. Without a solid foundation, even the best algorithms lose relevance and expose the company to biases or erroneous decisions.

It is therefore essential to structure and document common frameworks: definitions, nomenclatures, and source typologies.

Another major challenge is traceability: knowing where the data originates, how it is transformed, and the conditions under which it is used.

To make this foundation operational, organisations must establish a “Data Chain of Trust”:

- Collection – Identify relevant sources and verify their legitimacy.

- Secure Storage – Guarantee confidentiality, sovereignty, and GDPR compliance.

- Frameworks & Standards – Document a common nomenclature.

- Quality Controls – Implement continuous validation rules.

- Responsible Use – Ensure traceability of use cases and prevent any drift or misuse.
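
As an illustration, the traceability and responsible-use links of this chain could be captured in a small record per dataset. This is a minimal sketch, not a reference implementation: the `LineageRecord` class, its field names, and the sample values are hypothetical and would normally live in a data catalog or lineage tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageRecord:
    """Hypothetical traceability record for one dataset (illustrative only)."""
    dataset: str
    source: str          # where the data originates
    legal_basis: str     # basis verified at collection time (assumed field)
    transformations: list = field(default_factory=list)
    approved_uses: set = field(default_factory=set)

    def add_transformation(self, description: str) -> None:
        # Record each transformation in order, with a UTC timestamp.
        stamp = datetime.now(timezone.utc).isoformat()
        self.transformations.append((stamp, description))

    def is_use_allowed(self, use_case: str) -> bool:
        # Responsible use: only explicitly approved use cases pass.
        return use_case in self.approved_uses


record = LineageRecord(
    dataset="customer_contracts",
    source="crm_export",
    legal_basis="contract",
    approved_uses={"churn_scoring"},
)
record.add_transformation("removed direct identifiers")
record.add_transformation("aggregated to monthly level")

print(record.is_use_allowed("churn_scoring"))  # True
print(record.is_use_allowed("ad_targeting"))   # False
```

Keeping the approved uses next to the lineage means a request for a new use case is checked against the same record that documents where the data came from.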

Concrete Best Practices

- Implement a Data Catalog to inventory and qualify all datasets.

- Standardise Metadata to simplify data sharing and auditing processes.

- Monitor Quality Continuously through automated detection and alerts for data drift.

- Document Sensitive Datasets (e.g., HR, credit, healthcare).
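
To make continuous quality monitoring more tangible, here is a minimal sketch of one possible drift check, the Population Stability Index (PSI), computed on a single numeric feature. The choice of PSI, the function name, the bin count, and the 0.25 alert threshold (a commonly cited rule of thumb) are assumptions for illustration; the article does not prescribe a specific metric.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]        # training-time distribution
shifted = [0.5 + i / 200 for i in range(100)]    # recent data, upper half only

ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb value, tune per use case

for name, sample in [("unchanged", reference), ("shifted", shifted)]:
    psi = population_stability_index(reference, sample)
    status = "ALERT" if psi > ALERT_THRESHOLD else "ok"
    print(f"{name}: {status}")
```

In practice such a check would run on a schedule for each monitored feature and feed the alerts mentioned above.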

Scaling AI Governance and Tool Integration

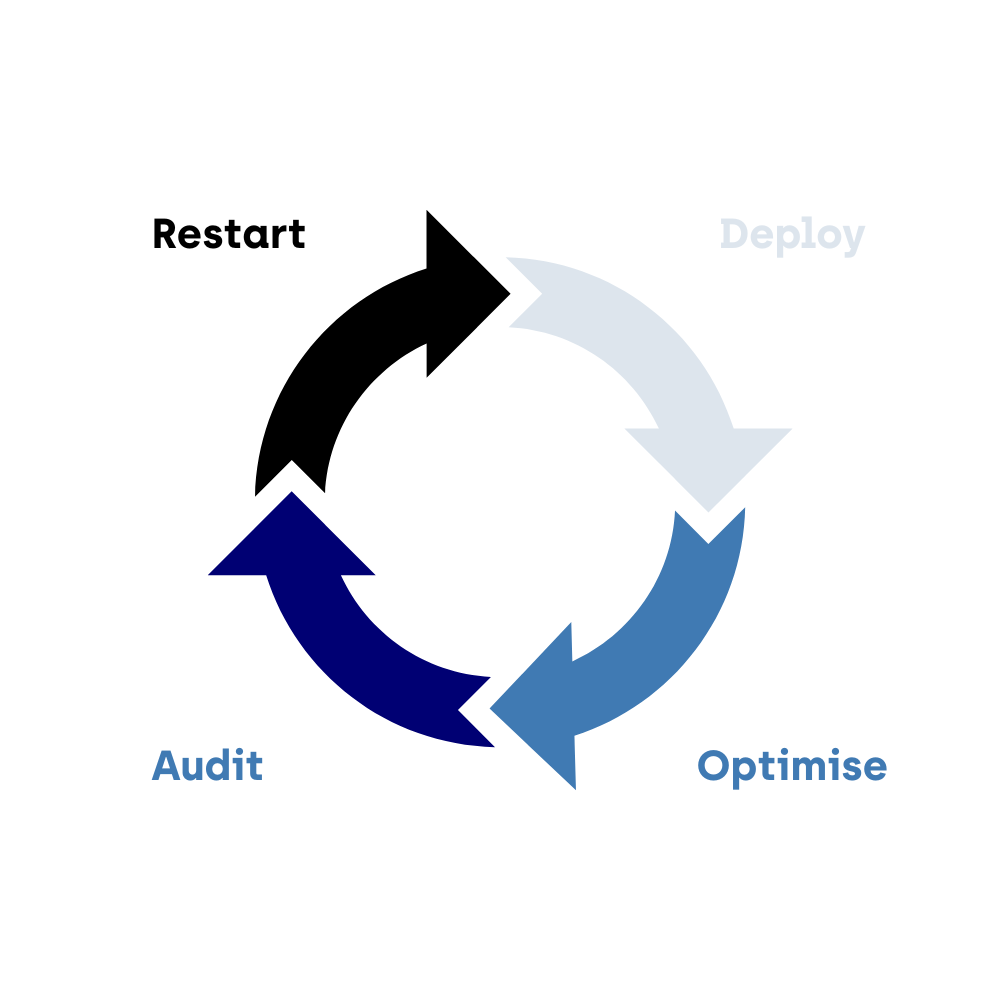

AI governance must be designed as a living architecture, capable of scaling alongside the company and its AI initiatives.

Equipping AI Governance Without Over-complicating

Three key tool families stand out:

- Data Governance & Data Quality: Cataloguing, traceability, and GDPR/AI Act compliance.

- Model Governance & MLOps: Lifecycle tracking, bias detection, and performance drift monitoring.

- Audit & Compliance: Automated documentation and transparent reporting for stakeholders.
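
As an example of what bias detection in the Model Governance family can mean in practice, the sketch below computes a demographic parity gap: the difference in positive-prediction rate between groups. This is only one of several fairness metrics, chosen here for simplicity; the function names and sample data are hypothetical.

```python
def selection_rates(predictions, groups):
    """Share of positive predictions (1) per group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Illustrative binary predictions for two groups of four people each.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(preds, groups))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A gap close to zero means the groups are selected at similar rates; what counts as an acceptable gap is a decision for the ethics and compliance roles, not for the code.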

Integration with Business Processes

AI governance fails when it remains confined to the IT department. It must be operational for business units: integrated into their workflows, with validation steps included from the design phase (Compliance by Design), and supported by relevant KPIs.
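
One way to picture Compliance by Design is as a gate in the delivery workflow: a use case cannot move to deployment until its required validations are recorded. The sketch below is a simplified assumption of such a gate; the validation names and the dictionary layout are hypothetical and would be defined by each organisation.

```python
# Hypothetical list of validations required before any deployment.
REQUIRED_VALIDATIONS = ("legal_review", "ethics_review", "data_quality_check")


def missing_validations(use_case: dict) -> list:
    """Return the required validations not yet recorded for this use case."""
    done = set(use_case.get("validations", []))
    return [v for v in REQUIRED_VALIDATIONS if v not in done]


def can_deploy(use_case: dict) -> bool:
    """A use case may be deployed only when nothing is missing."""
    return not missing_validations(use_case)


draft = {"name": "credit_scoring", "validations": ["legal_review"]}
ready = {"name": "invoice_ocr", "validations": list(REQUIRED_VALIDATIONS)}

print(missing_validations(draft))  # ['ethics_review', 'data_quality_check']
print(can_deploy(ready))           # True
```

Because the check runs from the design phase onward, business teams see what is still missing early instead of discovering it at release time.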

Conclusion

Implementing effective AI governance is not merely a matter of regulatory compliance: it is a strategic lever to ensure the trust, performance, and long-term viability of AI projects. By building on these three concrete prerequisites, companies can transform AI governance into a competitive advantage rather than a constraint.

To explore this topic further, we invite you to watch our MARGO x IBM webinar: AI Governance – Transforming Regulation into Opportunity, hosted by Hugo PRIGNOL and Ludovic MAGNIER (Co-Directors of MARGO AI Solutions) along with M’Hamed Benali (Data & AI Brand Technical Specialist at IBM). The speakers present practical insights on how to structure AI governance, manage risks, guarantee compliance, and deploy AI use cases at scale.

In this session, you will discover:

- How to establish an organisational structure and AI committees tailored to your company.

- Best practices for securing your data and frameworks, while ensuring traceability and quality.

- Tools and methods for monitoring model performance, detecting bias, and managing the AI model lifecycle.

- Concrete examples of integrating AI governance into business workflows and process optimisation.

Do not miss this opportunity to turn regulation into a driver of trust and innovation for your AI projects.

▶️ WEBINAR – AI Governance: Transforming Regulation into Opportunity

MARGO Support

At MARGO, we believe that effective AI governance is more than just regulatory compliance: it must become a true strategic lever. Through our entity MARGO AI Solutions, we support companies in structuring their organisation, securing and tracing data, and integrating suitable tools to monitor and manage their AI projects.

What is AI governance?

AI governance encompasses the set of processes, rules, and responsibilities that frame the development, deployment, and monitoring of AI models. It aims to ensure regulatory compliance, security, ethics, and value creation for the company.

Why is AI governance indispensable?

Without governance, AI projects risk generating algorithmic biases, compliance issues (GDPR, AI Act), or a loss of user trust. Solid governance reduces risks, accelerates adoption, and transforms regulation into a competitive advantage.

Does AI governance only concern large companies?

No. All organisations, regardless of size, should define a minimal governance framework. A startup can begin by appointing an AI lead and documenting practices, while a large corporation will implement an AI committee, regular audits, and advanced monitoring tools.

Which tools facilitate AI governance?

Several tool families support governance:

- Data Governance & Quality (data catalogs, traceability).

- Model Governance & MLOps (lifecycle tracking, bias detection).

- Audit & Compliance (solutions like IBM watsonx.governance, automated documentation).

The key is to deploy them progressively, based on the organisation’s maturity and its most sensitive use cases.